Google Webmaster Tools removes monthly Indexing Request quota

Google Webmaster Tools is an essential tool for anyone publishing on the web. The service provides insight into how content is being indexed, statistics on searches, and help in debugging issues. The Fetch as Google feature allows requesting an index update.

The Fetch as Google feature is very valuable because, as of 2018, you can use it to push content into web search results in near real time. This means that if you are in a competitive business like online news publishing, you can gain an edge by integrating it into your CMS as a "ping" mechanism.

For a moderate amount of publishing, manually submitting single URLs works fine. Until now, however, large-scale publishers will have hit the limitations of Fetch as Google. Likely for resource reasons, the feature has had a quota of 1000 requests per month for each verified account. This can become an issue if you have a large publishing team or automated content.

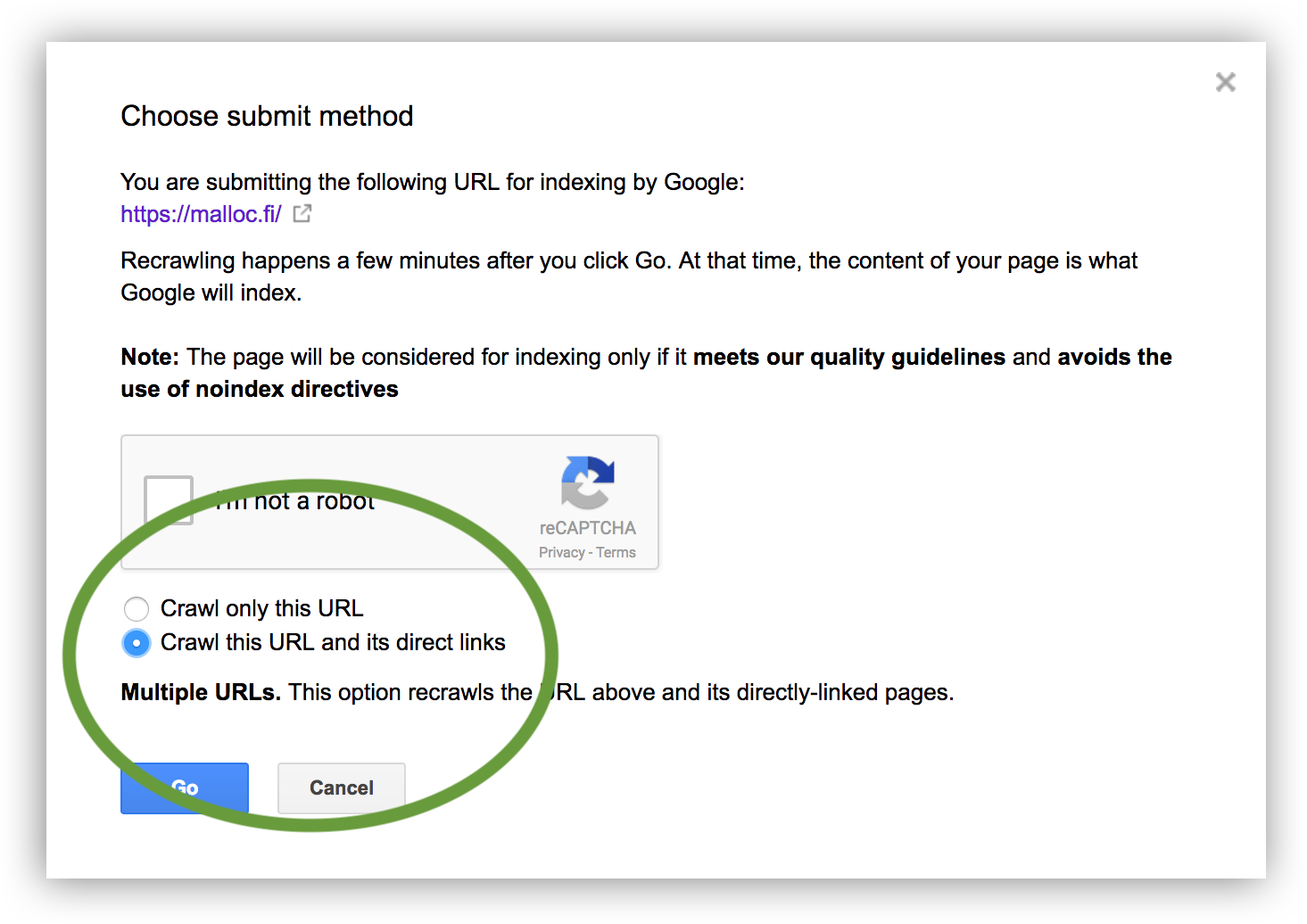

In addition to the 1000 requests, there has been a "premium" feature, Multiple URLs, that fetches a page along with all the pages it links to. This has been useful for mass updates of listing pages and the like. The monthly quota for this, however, has been a measly 10 requests per month.

Tales of 1010 requests are over: Unlimited wishes

In February 2018, Google has been rolling out a completely revamped version of its Webmaster Tools dashboard. The new version offers improvements such as 16 months of search statistics data (up from 90 days), but it still lacks features, and both versions will likely coexist for quite a while. I've also experienced minor glitches, such as random "An error has occurred" messages when making indexing requests.

Most development effort is likely going into the new version, but improvements also seem to be reaching the old(er) version, probably because both share the same infrastructure. The big news here is that neither "Crawl only this URL" nor "Crawl this URL and its direct links" has a monthly hard limit anymore.

Excessive use will likely trigger bot verification, but the change removes the need to ration indexing requests because of an artificial limit. Ten requests a month certainly felt Neanderthal in an age when robots are taking over repetitive tasks: My Job Went to Googlebot (and all I wrote was this lousy article).

Happy indexing,

-- Jani Tarvainen, 19/02/2018

P.S. If you are into content creation and search indexing, I recommend checking out the webmaster tools from Baidu, Bing, and Yandex as well. They're pretty good nowadays; you'll be surprised.